Now that we’ve got our ingest pipeline configured, let’s test and run it with the simulate API. To get a sample document to test with, you can either run Filebeat without the pipeline setting and then just grab an unprocessed document from Elasticsearch, or you can run Filebeat with the console output enabled, by commenting out the Elasticsearch section and adding a console output section (`output.console`) to the yml file.

In my simulate run the pipeline succeeded and, most importantly, all of the data appears to be in the right place. From here, I can just query my index for any documents that have error set and then debug them with the simulate API.
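As a rough sketch of what a simulate request looks like, the snippet below only builds the request path and JSON body; it does not contact a cluster. The pipeline name `inspections-pipeline` is a hypothetical placeholder, and the code assumes the raw line sits in the `message` field, which is where Filebeat puts it.

```python
import json


def build_simulate_request(pipeline_id, messages):
    """Return (path, json_body) for an ingest pipeline simulate call."""
    path = f"/_ingest/pipeline/{pipeline_id}/_simulate"
    # Each test document is wrapped in "_source", as the simulate API expects.
    body = {"docs": [{"_source": {"message": m}} for m in messages]}
    return path, json.dumps(body)


# Sample entry taken from the data set excerpt in this post (two physical lines).
sample = ('Wieland Elementary,78660,100,"900 TUDOR HOUSE RD\n'
          '(30.422862, -97.640183)",10051637,Routine Inspection')

# "inspections-pipeline" is a hypothetical pipeline name.
path, body = build_simulate_request("inspections-pipeline", [sample])
print(path)
```

You would send `body` as a POST to `path` on your cluster with a JSON content type, using whatever HTTP client and credentials you normally use.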

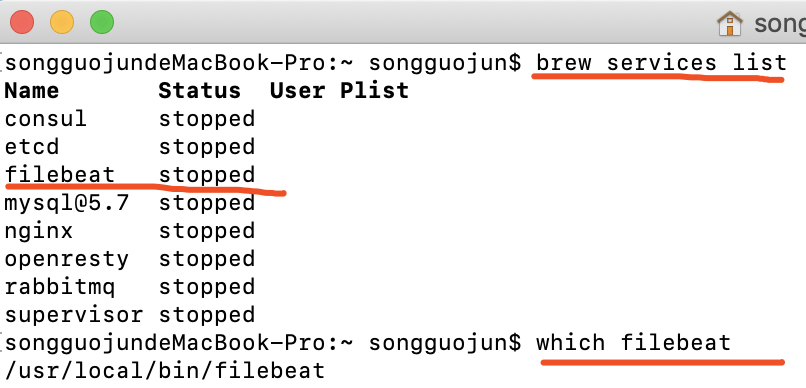


Looking at the Elasticsearch output section, it’s the standard Elasticsearch settings with a small addition of the name of the pipeline that you’d like to use with the pipeline: directive. If you’re on the ObjectRocket service, you can just grab the output snippet from the “Connect” tab in the UI, which will come prepopulated with all of the right hosts, and just add the pipeline line and fill in your user and password. Also, make sure you’ve got your system’s IP added to your cluster’s ACLs if you haven’t already done so.

Now that we have the input data and Filebeat ready to go, we can create and tweak our ingest pipeline. The main tasks the pipeline needs to perform are:

- Split the csv content into the correct fields.
- Convert the inspection score to an integer.

Here’s a pipeline that can do all of that:

`"description" : "Convert Restaurant inspections csv data to indexed data",`

`"value" : " - Error processing message - "`

Unlike Logstash, the ingest pipeline does not (at the time of this writing) have a csv processor/plugin, so you’ll need to transform csv’s yourself. I used a grok processor to do the heavy lifting, since each row only had a few columns. For data with many more columns, the grok processor can get pretty hairy, so another option is to use the split processor and some painless scripting to process the line in a more iterative fashion. You also may notice the second grok processor, which is just there to deal with two different ways that zip codes were entered in this data set.

For debug purposes, I included a general on_failure section which will catch all errors and print out which type of processor failed and what the message is that broke the pipeline.
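To make the pipeline's tasks concrete, here is a small Python stand-in that does the same three things locally: split one entry into fields, convert the score to an integer, and record an `error` field on failure. It is not the ingest pipeline itself and uses Python's `csv` module rather than grok. The column names come from the data set's header row; the sample row in the usage lines is hypothetical.

```python
import csv
import io

# Column names taken from the header row of the data set.
COLUMNS = ["Restaurant Name", "Zip Code", "Inspection Date", "Score",
           "Address", "Facility ID", "Process Description"]


def process_entry(message):
    """Split one (possibly multiline) CSV entry and convert its score."""
    doc = {"message": message}
    try:
        # newline="" keeps newlines inside quoted fields intact.
        rows = list(csv.reader(io.StringIO(message, newline="")))
        if len(rows) != 1 or len(rows[0]) != len(COLUMNS):
            raise ValueError("unexpected number of rows or columns")
        doc.update(zip(COLUMNS, rows[0]))
        doc["Score"] = int(doc["Score"])
    except (ValueError, csv.Error) as exc:
        # Mirrors the idea of a catch-all on_failure handler: note what
        # failed and why, and keep the original message for debugging.
        doc["error"] = f"{type(exc).__name__} - Error processing message - {exc}"
    return doc


# Hypothetical, made-up row used only for illustration.
row = ('Example Cafe,78701,01/15/2016,96,'
       '"100 EXAMPLE ST\nAUSTIN, TX 78701",1234567,Routine Inspection')
doc = process_entry(row)
print(doc["Score"], "error" in doc)
```

Keeping the original `message` alongside the `error` field is what makes the later "query for documents with error set, then replay them" workflow possible.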

The only parts I’ll call out specifically are the multiline bit and the Elasticsearch configuration piece. Since the formatting for this data set is not super strict, with inconsistent use of double quotes and a number of newlines sprinkled in, the best option was to look for the end of an entry, which consists of a numeric ID followed by an inspection type without much variation or double-quotes/newlines. From there, Filebeat will just queue up any unmatched lines and prepend them to the final line that matches the pattern. If your data is cleaner and sticks to a simple line per entry format, you can pretty much ignore the multiline settings.
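The queue-and-prepend behavior described here can be sketched in a few lines of Python. This only emulates the idea; it is not Filebeat's implementation and ignores its timeout and line-limit settings. The regular expression is my own guess at "a numeric ID followed by an inspection type", not necessarily the pattern used in the actual configuration.

```python
import re

# Assumed end-of-entry pattern: ",<numeric ID>,<inspection type>" at the
# end of a line, with no quotes or commas in the inspection type.
END_OF_ENTRY = re.compile(r',\d+,[^",]+$')


def group_entries(lines, end_pattern=END_OF_ENTRY):
    """Queue lines until one matches the pattern, then emit them as one entry."""
    entries, pending = [], []
    for line in lines:
        pending.append(line)
        if end_pattern.search(line):
            # The matching line closes the entry; earlier lines are prepended.
            entries.append("\n".join(pending))
            pending = []
    return entries


# Lines taken from the data set excerpt in this post.
lines = [
    "Restaurant Name,Zip Code,Inspection Date,Score,Address,Facility ID,Process Description",
    'Wieland Elementary,78660,100,"900 TUDOR HOUSE RD',
    '(30.422862, -97.640183)",10051637,Routine Inspection',
]

# Skip the header row, as the configuration does with the column headings.
for entry in group_entries(lines[1:]):
    print(repr(entry))
```

Anchoring on the end of an entry works here because the last two columns are the most regular part of each record, while the address in the middle is where the stray quotes and newlines show up.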


`Restaurant Name,Zip Code,Inspection Date,Score,Address,Facility ID,Process Description`

`Wieland Elementary,78660,100,"900 TUDOR HOUSE RD`

`(30.422862, -97.640183)",10051637,Routine Inspection`

DOH… This isn’t going to be a nice, friendly, single line per entry case, but that’s fine. As you’re about to see, Filebeat has some built-in ability to handle multiline entries and work around the newlines buried in the data.

Editorial Note: I was planning on a nice simple example with few “hitches”, but in the end, I thought it may be interesting to see some of the tools that the Elastic Stack gives you to work around these scenarios.

The first step is to get Filebeat ready to start shipping data to your Elasticsearch cluster. Once you’ve got Filebeat downloaded (try to use the same version as your ES cluster) and extracted, it’s extremely simple to set up via the included filebeat.yml configuration file. For our scenario, here’s the configuration that I’m using.

`# Ignore the first line with column headings`

`# Identifies the last two columns as the end of an entry and then prepends the previous lines to it`

`# Optional protocol and basic auth credentials.`

Everything’s pretty straightforward here; you’ve got a section to specify where and how to grab the input files and a section to specify where to ship the data.
